Jason Ma

Hi there! I am a co-founder at Dyna, where we are building general and robust AI robots.

If you are interested in our work and mission, please reach out!

Previously, I completed my PhD at the UPenn GRASP Laboratory.

My research interests are robot foundation models and reinforcement learning. Prior to Dyna, I also spent time at Google DeepMind, NVIDIA AI, and Meta AI.

Selected honors:

- RSS Pioneers, 2025

- Apple Scholars in AI/ML PhD Fellowship, 2024

- OpenAI Superalignment PhD Fellowship, 2024

- ICRA Best Paper Finalist in Robot Vision, 2024

- NVIDIA Top 10 Research Project of the Year, 2023

My research has pioneered new algorithms for training and leveraging foundation models from internet data to teach robots new tasks. Specifically, my research has

- introduced a new paradigm of scaling large language model (LLM) test-time compute to automate sim-to-real robot learning (ICLR 2024; NVIDIA Top 10 Research Project of the Year 2023; RSS 2024; CoRL 2024 Oral)

- developed scalable and principled reinforcement learning algorithms that leverage human videos to learn visual rewards for self-improving robotic manipulation (ICLR 2023 Spotlight; ICRA 2024 Best Paper Finalist in Robot Vision; CoRL 2023 LEAP Workshop Best Paper; ICRA 2025)

- demonstrated how best to combine multimodal learning and RL to train and use vision-language models (VLMs) as universal value functions (ICML 2023; ICLR 2025 Spotlight)

Google Scholar • GitHub • LinkedIn • Twitter

yechengma at gmail dot com

(* indicates equal contribution, † indicates equal advising)

Vision-Language Models are In-Context Value Learners

Jason Ma*, Joey Hejna, Ayzaan Wahid, Chuyuan Fu, Dhruv Shah, Jacky Liang, Zhuo Xu, Sean Kirmani, Peng Xu, Danny Driess, Ted Xiao, Jonathan Tompson, Osbert Bastani, Dinesh Jayaraman, Wenhao Yu, Tingnan Zhang, Dorsa Sadigh, Fei Xia

International Conference on Learning Representations (ICLR), 2025 (Spotlight)

Webpage • Arxiv

Articulate-Anything: Automatic Modeling of Articulated Objects via Vision-Language Models

Long Le, Jason Xie, William Liang, Hung-Ju Wang, Yue Yang, Jason Ma, Kyle Vedder, Arjun Krishna, Dinesh Jayaraman, Eric Eaton

International Conference on Learning Representations (ICLR), 2025

Webpage • Arxiv • Code

Eurekaverse: Environment Curriculum Generation via Large Language Models

William Liang, Sam Wang, Hungju Wang, Osbert Bastani, Dinesh Jayaraman†, Jason Ma†

Conference on Robot Learning (CoRL), 2024 (Oral)

Webpage • Arxiv • Code

On-Robot Reinforcement Learning with Goal-Contrastive Rewards

Ondrej Biza, Thomas Weng, Lingfeng Sun, Karl Schmeckpeper, Tarik Kelestemur, Jason Ma†, Robert Platt†, Jan-Willem van de Meent†, Lawson L. S. Wong†

International Conference on Robotics and Automation (ICRA), 2025

Arxiv

DrEureka: Language Model Guided Sim-To-Real Transfer

Jason Ma*, William Liang*, Hungju Wang, Sam Wang, Yuke Zhu, Linxi "Jim" Fan, Osbert Bastani, Dinesh Jayaraman

Robotics: Science and Systems (RSS), 2024

Webpage • Arxiv • Code

Eureka: Human-Level Reward Design via Coding Large Language Models

Jason Ma, William Liang, Guanzhi Wang, De-An Huang, Osbert Bastani, Dinesh Jayaraman, Yuke Zhu, Linxi "Jim" Fan†, Anima Anandkumar†

International Conference on Learning Representations (ICLR), 2024

NVIDIA Top 10 Research Projects of 2023

Webpage • Arxiv • Code

Universal Visual Decomposer: Long-Horizon Manipulation Made Easy

Charles Zhang*, Yunshuang Li*, Osbert Bastani, Abhishek Gupta, Dinesh Jayaraman, Jason Ma†, Lucas Weihs†

International Conference on Robotics and Automation (ICRA), 2024 (Best Paper Finalist)

Best Paper Award, CoRL 2023 LEAP Workshop

Webpage • Arxiv • Code

LIV: Language-Image Representations and Rewards for Robotic Control

Jason Ma, Vikash Kumar, Amy Zhang, Osbert Bastani, Dinesh Jayaraman

International Conference on Machine Learning (ICML), 2023

Webpage • Arxiv • Code

VIP: Towards Universal Visual Reward and Representation via Value-Implicit Pre-Training

Jason Ma, Shagun Sodhani, Dinesh Jayaraman, Osbert Bastani, Vikash Kumar†, Amy Zhang†

International Conference on Learning Representations (ICLR), 2023 (Spotlight)

Best Paper Finalist, NeurIPS 2022 Deep RL Workshop

Webpage • Arxiv • Code

How Far I'll Go: Offline Goal-Conditioned RL via f-Advantage Regression

Jason Ma, Jason Yan, Dinesh Jayaraman, Osbert Bastani

Neural Information Processing Systems (NeurIPS), 2022 (Nominated for Outstanding Paper)

Webpage • Arxiv • Code

TOM: Learning Policy-Aware Models for MBRL via Transition Occupancy Matching

Jason Ma*, Kausik Sivakumar*, Jason Yan, Osbert Bastani, Dinesh Jayaraman

Learning for Decision and Control (L4DC), 2023

Webpage • Arxiv • Code

SMODICE: Versatile Offline Imitation from Observations and Examples

Jason Ma, Andrew Shen, Dinesh Jayaraman, Osbert Bastani

International Conference on Machine Learning (ICML), 2022

Webpage • Arxiv • Code

CAP: Conservative and Adaptive Penalty for Model-Based Safe Reinforcement Learning

Jason Ma*, Andrew Shen*, Osbert Bastani, Dinesh Jayaraman

Association for the Advancement of Artificial Intelligence (AAAI), 2022

Arxiv •

Code

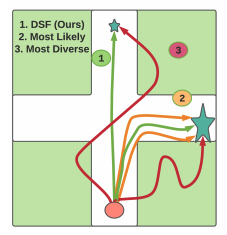

Likelihood-Based Diverse Sampling for Trajectory Forecasting

Jason Ma, Jeevana Priya Inala, Dinesh Jayaraman, Osbert Bastani

International Conference on Computer Vision (ICCV), 2021

Arxiv • Code

Conservative Offline Distributional Reinforcement Learning

Jason Ma, Dinesh Jayaraman, Osbert Bastani

Neural Information Processing Systems (NeurIPS), 2021

Arxiv • Code

- 2025: UC Berkeley, December 2025

- 2024: MIT Embodied Intelligence Seminar

- 2023: MIT IAI Lab

- 2022: University of Edinburgh RL Seminar

- 2024: Co-Organizer, RSS Workshop on Task Specification for General-Purpose Intelligent Robots

- 2023: Co-Organizer, NeurIPS Workshop on Goal-Conditioned Reinforcement Learning

- 2023: Co-Organizer, GRASP Student, Faculty, and Industry (SFI) Seminar

- 2021+: Reviewer, NeurIPS, ICML, ICLR, AAAI, ICRA, IROS, RA-L, CoRL

- Past: William Liang (now a PhD student at UC Berkeley)